As a user, how often have you thought “I wish this web service was faster.” As a CEO, how often have you said “just make it faster.” Or, more simply, “why is this damn thing so slow?”

This is not a new question. I’ve been thinking about this since I first started writing code (APL) when I was 12 (ahem – 33 years ago) on a computer in the basement of a Frito-Lay data center in Dallas.

This morning, as part of my daily information routine, I came across a brilliant article by Carlos Bueno, an engineer at Facebook, titled “The Full Stack, Part 1.” In it, he starts by defining a “full-stack programmer”:

“A ‘full-stack programmer’ is a generalist, someone who can create a non-trivial application by themselves. People who develop broad skills also tend to develop a good mental model of how different layers of a system behave. This turns out to be especially valuable for performance & optimization work.”

He then dissects a simple SQL query (DELETE FROM some_table WHERE id = 1234;) and gives several quick reasons why performance could vary widely when this query is executed.
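To make just one of those layers concrete, here is a minimal sketch using Python's standard-library `sqlite3` module, with SQLite standing in for whatever database is involved. It asks the engine how it plans to run that same `DELETE` before and after an index exists on `id`. The table contents and the index name `idx_some_table_id` are hypothetical, and this covers only the database layer; disks, caches, and the network below it can matter just as much.

```python
import sqlite3


def delete_plan(conn: sqlite3.Connection) -> list[str]:
    """Return SQLite's query-plan description of the DELETE quoted above."""
    rows = conn.execute(
        "EXPLAIN QUERY PLAN DELETE FROM some_table WHERE id = 1234"
    ).fetchall()
    # The human-readable plan text is the last of the four result columns.
    return [row[3] for row in rows]


conn = sqlite3.connect(":memory:")
# Hypothetical schema: `id` is an ordinary column here, not a PRIMARY KEY,
# so SQLite has no index on it until we create one.
conn.execute("CREATE TABLE some_table (id INTEGER, payload TEXT)")
conn.executemany(
    "INSERT INTO some_table VALUES (?, ?)",
    [(i, "x" * 50) for i in range(10_000)],
)

before = delete_plan(conn)  # a SCAN: every row is read to find id = 1234
conn.execute("CREATE INDEX idx_some_table_id ON some_table (id)")
after = delete_plan(conn)  # a SEARCH via idx_some_table_id: jump to matching rows

print("without index:", before)
print("with index:   ", after)
```

The exact plan wording varies between SQLite versions, but the shift from a scan to an index search is the point: the identical statement can touch one row or every row.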

It reminded me of a client situation from my first company, Feld Technologies. We were working on a logistics project with a management consulting firm for one of the largest retail companies in the world. The folks from the management consulting firm did all the design and analysis; we wrote the code to work with the massive databases that supported this. This was in the early 1990s and we were working with Oracle on the PC (not a pretty thing, but required by this project for some reason.) The database was coming from a mainframe and by PC standards was enormous (although it would probably be considered tiny today.)

At this point Feld Technologies was about ten people and, while I still wrote some code, I wasn’t doing anything on this particular project other than helping at the management consulting level (e.g. I’d dress up in a suit and go with the management consultants to the client and participate in meetings.)  One of our software engineers wrote all the code.  He did a nice job of synthesizing the requirements, wrestling Oracle for the PC to the ground (on a Novell network), and getting all the PL/SQL stuff working.

We had one big problem. It took 24 hours to run a single analysis. Now, there was no real-time requirement for this project – we might have gotten away with it if it took eight hours, as we could just run them overnight. But it didn’t work for the management consultants or the client to hear “ok – we just pressed go – call us at this time tomorrow and we’ll tell you what happened.” This was especially painful once we gave the system to the end client, whose internal analyst would run the system, wait 24 hours, tell us the analysis didn’t look right, and bitch loudly to his boss, who was a senior VP at the retailer and paid our bills.

I recall having a very stressful month. After a week of this (where we probably got two analyses done because of the time it took to iterate on the changes requested by the client for the app) I decided to spend some time with our engineer who was working on it. I didn’t know anything about Oracle as I’d never done anything with it as a developer, but I understood relational databases extremely well from my previous work with Btrieve and Dataflex. And, looking back, I met the definition of a full-stack programmer all the way down to the hardware level (at the time I was the guy in our company who fixed the file servers when they crashed with our friendly neighborhood parity errors or NetWare “device driver failed to load” errors.)

Over the course of a few days, we managed to cut the run time down to under ten minutes. My partner Dave Jilk, also a full-stack programmer (and a much better one than me), helped immensely as he completely grokked relational database theory. When all was said and done, a faster hard drive, more memory, a few indexes that were missing, restructuring of several of the SELECT statements buried deep in the application, and a minor restructure of the database were all that was required to boost the performance by 100x.
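The post doesn't say what the restructured SELECT statements looked like. As a hypothetical illustration of one common kind of restructuring, this sketch contrasts issuing one query per row from application code with a single set-based query that lets the database do the join and aggregation. It uses Python's `sqlite3`; the `stores`/`shipments` tables and all function names are invented for the example. In a client/server setup like Oracle on a Novell network, every extra statement also pays a network round trip, which an in-memory SQLite database hides.

```python
import sqlite3


def build_db() -> sqlite3.Connection:
    """Hypothetical schema: 100 stores, 10,000 shipment rows, index on the join key."""
    conn = sqlite3.connect(":memory:")
    conn.executescript(
        """
        CREATE TABLE stores (store_id INTEGER PRIMARY KEY);
        CREATE TABLE shipments (store_id INTEGER, qty INTEGER);
        CREATE INDEX idx_shipments_store ON shipments (store_id);
        """
    )
    conn.executemany("INSERT INTO stores VALUES (?)", [(i,) for i in range(1, 101)])
    conn.executemany(
        "INSERT INTO shipments VALUES (?, ?)",
        [(i % 100 + 1, i % 7) for i in range(10_000)],
    )
    return conn


def totals_per_row(conn: sqlite3.Connection) -> tuple[dict[int, int], int]:
    """One query for the store list, then one more per store (N + 1 statements)."""
    queries = 1
    store_ids = [row[0] for row in conn.execute("SELECT store_id FROM stores")]
    totals = {}
    for store_id in store_ids:
        (total,) = conn.execute(
            "SELECT COALESCE(SUM(qty), 0) FROM shipments WHERE store_id = ?",
            (store_id,),
        ).fetchone()
        totals[store_id] = total
        queries += 1
    return totals, queries


def totals_set_based(conn: sqlite3.Connection) -> tuple[dict[int, int], int]:
    """The same totals from a single join + GROUP BY statement."""
    rows = conn.execute(
        """
        SELECT s.store_id, COALESCE(SUM(sh.qty), 0)
        FROM stores AS s
        LEFT JOIN shipments AS sh ON sh.store_id = s.store_id
        GROUP BY s.store_id
        """
    ).fetchall()
    return dict(rows), 1


if __name__ == "__main__":
    conn = build_db()
    per_row, n_per_row = totals_per_row(conn)
    set_based, n_set = totals_set_based(conn)
    print(per_row == set_based, n_per_row, n_set)  # prints: True 101 1
```

Both versions produce identical totals; the difference is 101 statements versus one. How much that costs depends on per-statement overhead somewhere lower in the stack, which is exactly the kind of thing a full-stack view helps you notice.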

When I reflect on all of this, I realize how important it is to have a few full-stack programmers on the team. Sometimes it’s the CTO, sometimes it’s the VP of Engineering, sometimes it’s just someone in the guts of the engineering organization. When I think of the companies I’ve worked with recently that are dealing with massive scale and have to be obsessed with performance, such as Zynga, Gist, Cloud Engines, and SendGrid, I can identify the person early in the life of the company who played the key role. And, when I think of companies that did magic stuff like Postini and FeedBurner at massive scale, I know exactly who that full-stack programmer was.

If you are a CEO of a startup, do you know who the full-stack programmer on your team is?

We wouldn't fret over monopoly so much if it came with a term limit. If Facebook's rule over social networking were somehow restricted to, say, 10 years--or better, ended the moment the firm lost its technical superiority--the very idea of monopoly might seem almost wholesome. The problem is that dominant firms are like congressional incumbents and African dictators: They rarely give up even when they are clearly past their prime. Facing decline, they do everything possible to stay in power. And that's when the rest of us suffer.

A 70-page document (PDF) showing the TSA's procurement specifications, classified as "sensitive security information," says that in some modes the scanner must "allow exporting of image data in real time" and provide a mechanism for "high-speed transfer of image data" over the network. (It also says that image filters will "protect the identity, modesty, and privacy of the passenger.")

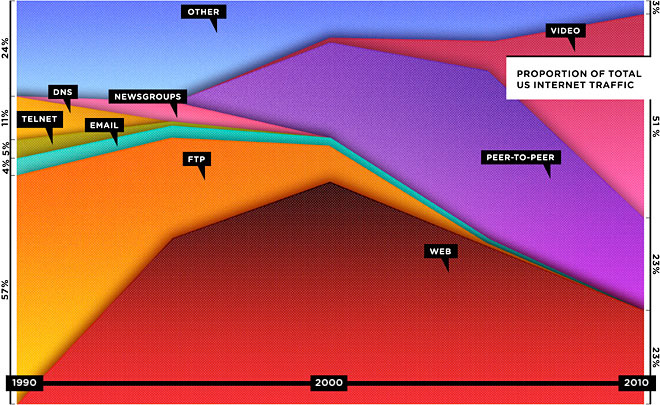

Their feature,
